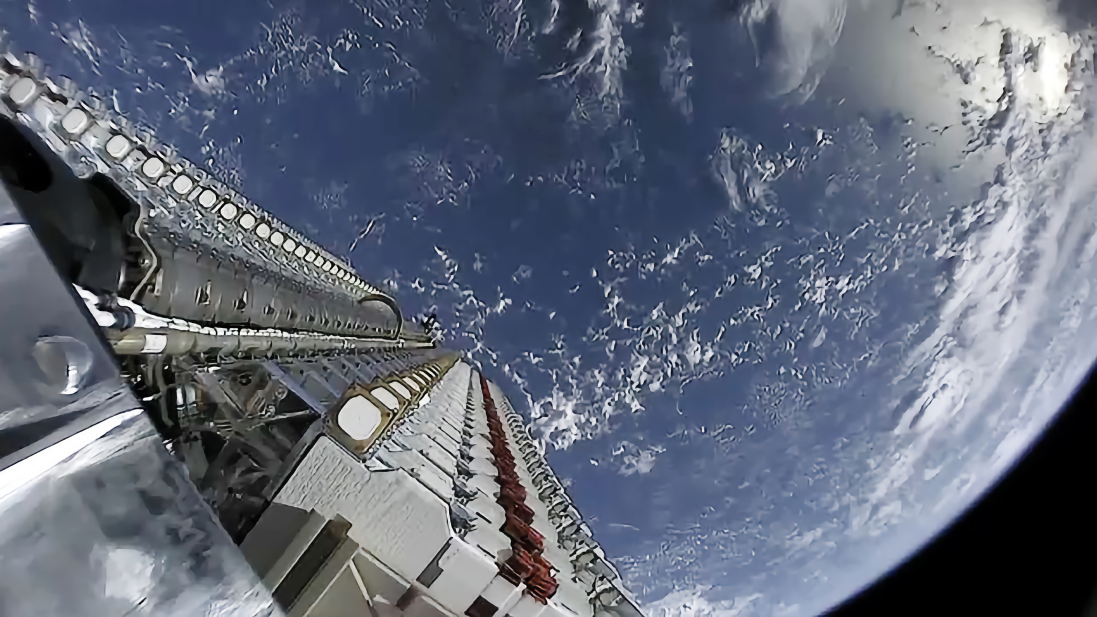

Now, Musk sees a chance to bring this vision to life. His company, SpaceX, has applied for regulatory approval to build solar-powered orbital data centers, potentially spanning up to a million satellites, capable of moving as much as 100 GW of computing power off Earth. He has even suggested that some of these AI satellites could be constructed on the moon.
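The two upper bounds above can be combined into a rough per-satellite figure that gives a sense of scale. The sketch below is only a back-of-envelope calculation. It assumes, hypothetically, that both limits are reached together and that power is split evenly across satellites. The constant and function names are illustrative and do not come from SpaceX's filing.

```python
# Back-of-envelope scale check using the upper bounds quoted in the text.
# Hypothetical assumption: the full 100 GW is split evenly across one million satellites.
TOTAL_COMPUTE_POWER_W = 100e9   # "as much as 100 GW"
SATELLITE_COUNT = 1_000_000     # "up to a million satellites"


def average_power_per_satellite_w(total_w: float, count: int) -> float:
    """Return the average power per satellite in watts, assuming an even split."""
    return total_w / count


per_sat_w = average_power_per_satellite_w(TOTAL_COMPUTE_POWER_W, SATELLITE_COUNT)
print(f"{per_sat_w / 1e3:.0f} kW per satellite")
```

Under these assumptions, each satellite would need to supply about 100 kW. A real constellation could mix satellites of very different sizes, so this is an average rather than a design figure.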

“In three years or less, the most cost-effective location for AI will be space,” Musk said last week on a podcast hosted by John Collison.

He isn’t the only one pursuing this idea. xAI’s head of compute reportedly wagered with his counterpart at Anthropic that 1% of the world’s compute capacity will be in orbit by 2028. Meanwhile, a company with backing from Google and Andreessen Horowitz recently submitted plans for an 80,000-satellite constellation. Even Jeff Bezos has described orbital AI as the future.

But beyond the excitement, what does it actually take to deploy data centers in space?

Preliminary analysis shows that current Earth-based data centers are still more cost-effective than orbital ones. Space engineer Andrew McCalip created a calculator comparing the two, with baseline estimates indicating that a 1 GW orbital data center could cost $42.4 billion—nearly three times the cost of a terrestrial equivalent—due to the upfront expenses of building and launching satellites.
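The baseline figures can be turned into a cost per watt and an implied terrestrial cost with simple arithmetic. The sketch below is a rough check, not a reproduction of McCalip's calculator. It makes one labeled assumption: "nearly three times" is treated as a ratio of exactly 3. The variable names are illustrative.

```python
# Rough unit-economics check on the baseline estimate quoted in the text.
# Hypothetical assumption: "nearly three times" is treated as a ratio of exactly 3.
ORBITAL_COST_USD = 42.4e9   # baseline estimate for a 1 GW orbital data center
CAPACITY_W = 1e9            # 1 GW
COST_RATIO = 3.0            # stand-in for "nearly three times" (assumption)

# Capital cost per watt of capacity for the orbital option.
orbital_usd_per_w = ORBITAL_COST_USD / CAPACITY_W

# Terrestrial cost implied by the assumed ratio.
implied_terrestrial_usd = ORBITAL_COST_USD / COST_RATIO

print(f"Orbital: ${orbital_usd_per_w:.2f} per watt")
print(f"Implied terrestrial equivalent: about ${implied_terrestrial_usd / 1e9:.1f} billion")
```

This works out to about $42.40 per watt for the orbital option and roughly $14.1 billion for the terrestrial equivalent. Because the stated ratio is somewhat under 3, the true terrestrial figure in the baseline would be slightly higher than this.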

Experts say making orbital data centers economically viable will require advances across multiple technologies, enormous capital investment, and extensive work on supply chains for space-grade components. The economics will also hinge on rising terrestrial costs as demand strains resources and supply chains on Earth.